1. Introduction

Crowdsourcing the creation, correction or enhancement of data about objects through games is an attractive proposition for museums looking to maximize use of their collections online without committing intensive curatorial resources to enhancing catalogue records. This paper investigates the optimum game designs to encourage participation and the generation of useful data through a case study of the project Museum Metadata Games (MMG), designed to help improve the mass of 'difficult' – technical, near-duplicate, poorly catalogued or scantily digitized – records that make up the majority of many history museum collections.

2. The problem

Museum collections websites, whether object catalogues or thematic sites with interpretative content, sometimes fail to achieve levels of public usage commensurate with the resources taken to create them. Many collections websites lack the types of metadata that would aid discoverability, or fail to offer enough information and context to engage casual or non-specialist visitors who find themselves on a collection page. As Trant (2009) found, the "information presented is structured according to museum goals and objectives," and the language used is "highly specialized and technical, rendering resources inaccessible or incomprehensible".

This is not a cheap problem to solve. As Karvonen (2010) says:

Digitising museum objects is expensive. The physical characteristics of museum materials make them unsuited for mass digitising, and because of their uniqueness, creating descriptive metadata for museum objects is a painstakingly slow process.

3. Is crowdsourcing the solution?

At least part of the solution lies with crowdsourcing. First described in Wired (Howe, 2006) as "using the Internet to exploit the spare processing power of millions of human brains", crowdsourcing has been adopted by various cultural heritage projects, led by two influential projects, steve.museum and Games with a Purpose (GWAP).

The steve.museum project showed that the public were interested in tagging art objects, and that the resulting content was beneficial, meeting a real need. Researchers from the project analyzed crowdsourced tags, finding that tags "provide a significantly different vocabulary than museum documentation: 86% of tags were not found in museum documentation. The vast majority of tags – 88.2% – were assessed as Useful for searching by museum staff" (Trant, 2009).

Games with a Purpose (GWAP) proved that games could bring mass audiences to computation problems. Von Ahn and Dabbish (2008) defined a 'game with a purpose' (GWAP) as "a game in which the players perform a useful computation as a side effect of enjoyable game play". Under their terms, GWAPs always contribute to solving a computational problem and include a form of "input-output behavior". Games produced include The ESP Game, also available as the Google Image Labeler (http://images.google.com/imagelabeler/). This is a tagging game that produces "meaningful, accurate labels for images on the Web as a side effect of playing the game". The ESP game had gathered more than 50 million labels for images from 200,000 players as of July 2008.

Tagging games have subsequently been applied to art collections (e.g., Brooklyn Museum's Tag! You're It!, http://www.brooklynmuseum.org/opencollection/tag_game/), contemporary audio-visual material (Waisda?, Oomen et al., 2010) and archives (Tiltfactor, http://www.tiltfactor.org/metadata-games).

However, art museums and galleries tend to have smaller collections than natural history or social history museums. As representations, artworks can be easily tagged in terms of style, color, material, period and content (things, people and events depicted), and can even evoke emotional and visceral responses. Art objects are also more likely to be unique and visually distinct. Social history collections, by contrast, can contain tens or hundreds of similar objects, including technical items, reference collections, objects whose purpose may not be immediately evident from their appearance, and objects whose meaning may be obscure to the general visitor.

The makers of the original metadata crowdsourcing games, Games with a Purpose (GWAP), said:

...the choice of images used by the ESP game makes a difference in the player's experience. The game would be less entertaining if all the images were chosen from a single site and were all extremely similar (Von Ahn and Dabbish, 2004).

And yet that exactly describes the 'difficult' technical and social history objects held by many museums, as evidenced by three objects labeled 'toy' in the Powerhouse Museum collection, below. Does that mean crowdsourcing games will not work on difficult objects?

Fig 1: Models of vertical steam engines. Thumbnails under license from the Powerhouse Museum


4. The case study – Museum Metadata Games

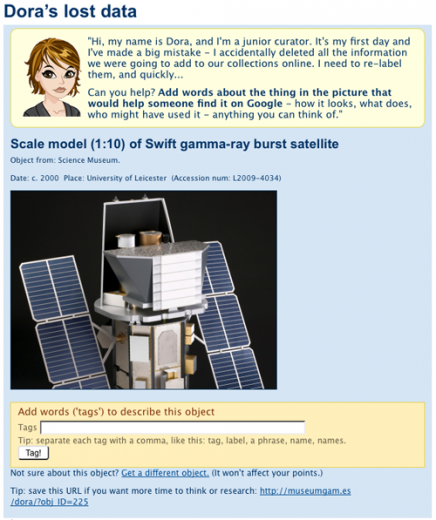

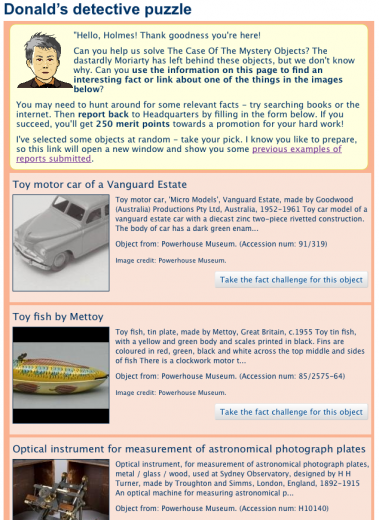

The Museum Metadata Games (MMG) (http://museumgam.es) project investigated the potential for casual browser-based games to engage non-specialist audiences with objects through game-play that would also help improve the mass of 'difficult' – technical, near-duplicate, poorly catalogued or scantily digitized – records that make up the majority of history museum collections. The study produced two games based on the mass of registered collections of science and social history museums: 'Dora', a tagging game; and 'Donald', an experimental 'trivia' game that explored emergent game-play around longer forms of content that required some form of research or personal reference.

Fig 2: The landing page for tagging game 'Dora' uses the character introduction to provide a brief back story and give the player instructions. Further instructions are provided around the data entry form. Source: http://museumgam.es/dora/


Fig 3: The landing page for the fact game 'Donald' introduces a more complex game scenario and task. The players can choose their own object. Source: http://museumgam.es/donald/


A research-based persona (http://www.miaridge.com/wp-content/uploads/2010/12/Persona_Janet.pdf) was used during the design process for 'Dora'. Alongside a traditional design process, a game design workshop based on the structure and principles of a creativity workshop (Jones, 2008) was held at an early stage to generate game atoms (basic actions) and game designs. The workshop produced paper prototypes. Paper prototypes allow you to 'throw away' ideas without losing invested time or resources, to test with a range of target audiences and to explore many ideas without committing design or programming costs. Paper prototypes can also help explain the game to stakeholders.

Each game was first built as a simple activity, to establish a control for later evaluation and to help identify barriers to participation. The games were developed iteratively with build/evaluate/redesign cycles, with two main version points each. The narrative cartoon characters were created with avatar creation tools designed for social networking sites.

Building metadata games on WordPress

The games were built in PHP, HTML and CSS as plugins and themes for WordPress, a PHP-based application originally designed as a blogging platform. The plugins delivered the game functionality while themes managed the presentation layer.

The MMG plugin is available for installation on other WordPress sites. Objects can be imported for use in the game through another plugin, MMG-Import, that queries various museum Web services (at the time of writing, the Powerhouse and Culture Grid APIs) and imports the results so the new objects can be played in the games; or through custom SQL imports for collections without APIs. GitHub was used for version control, and will also host download links for the plugins (http://github.com/mialondon/).

The benefits of using WordPress as a platform included ease of installation and deployment of updated plugins, a page layout and navigation framework, the ability to write extra functionality in PHP as required, built-in user account management and internal data management libraries, and the availability of free plugins and themes, saving some development time. Disadvantages included the fact that free plugins are sometimes worth what one pays for them, and reliance on the WordPress codex to provide the 'hooks' needed.

Building metadata games with a collections API

Objects were selected programmatically on the basis of selected subject searches, without manual intervention, though the import scripts did reject objects without images. Image and metadata quality varied.
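The rejection rule described above can be sketched as a simple filter. This is a minimal Python illustration rather than the MMG-Import plugin's own PHP code; the record fields (`id`, `title`, `image_url`) are hypothetical and are not taken from the Powerhouse or Culture Grid API response formats.

```python
from typing import Iterable, List


def select_playable(records: Iterable[dict]) -> List[dict]:
    """Keep only records that carry a non-empty image URL.

    The 'image_url' field name is an assumption made for this sketch.
    """
    playable = []
    for record in records:
        image_url = (record.get("image_url") or "").strip()
        if image_url:
            playable.append(record)
    return playable


# Invented sample records, for illustration only.
sample_records = [
    {"id": 1, "title": "Model steam engine", "image_url": "http://example.org/1.jpg"},
    {"id": 2, "title": "Glass plate negative", "image_url": ""},
    {"id": 3, "title": "Toy locomotive"},
]
playable_ids = [r["id"] for r in select_playable(sample_records)]
print(playable_ids)
```

Only the first record survives, because the other two have an empty or missing image field.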

One advantage of this API use is the extensibility of the import plugin. Working in conjunction with another programmer for about four hours at Culture Hack Day (http://culturehackday.org.uk/), I was able to update the import plugin previously written to provide access to the 1.2 million records of the UK Culture Grid (http://www.culturegrid.org.uk/). This means MMG visitors can search for Culture Grid objects and import them into the games to play with them.

Results

The games were successful in engaging people in game-play and in motivating them to contribute metadata about difficult museum objects. As a rough calculation, the overall visitor participation rate was 13%, meaning 13% of visitors to the site played games.

Site visits

In the analysis period (December 3 – March 1), there were 969 visitors from 46 countries, with 1,438 visits and 5,512 page views.

Average time on site, pages per visit and bounce rate varied according to visitor intentions (game players or curious peers) over time. Overall site averages were 3.83 pages per visit, with an average visit length of 3:52 minutes. The bounce rate was 47.64%. When 'bounce' visits were excluded, the average time on site was 7:11 minutes, with 6.45 pages per visit.

The game was 'marketed' sporadically on personal social media channels (Twitter, Facebook) and discussed on museum technologist lists and sites. Interestingly, Facebook referrals show a comparatively high number of page views and longer session times than other social media visits. Excluding bounce visits, Facebook referrers viewed 8.18 pages and spent 9:59 minutes per visit. This may be because Facebook users are comfortable with notifications about new games, or because Twitter referrals from my personal network tended to be curious peers rather than game players.

Games played

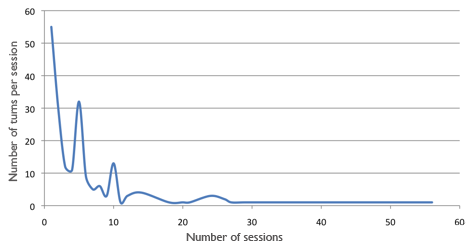

Overall, 196 game sessions were played, with a total of 1,079 turns (average 5.51 turns per session); 47 users registered for the site. These 1,079 turns created 6,039 tags (average 18 tags per object), 2,232 unique tags, and 37 facts for 36 objects.
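The headline ratios can be reproduced from the raw counts reported in this section. The sketch below assumes that the rough 13% participation figure was derived as game sessions divided by site visits; the text does not state the formula, so that reading is an assumption.

```python
# Counts as reported in the Results section.
visits = 1438
sessions = 196
turns = 1079

turns_per_session = turns / sessions
# Assumption: participation = sessions / visits (treats each session as one visit).
participation_rate = sessions / visits

print(f"{turns_per_session:.2f} turns per session")
print(f"{participation_rate:.1%} of visits included a game session")
```

Under that assumption the calculation gives 5.51 turns per session and a participation rate of about 13.6%, in line with the rounded figure quoted earlier.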

The highest number of turns for a single session was 56, and the average was 1.76. In the second version of Dora, the average number of turns per session was 2.34.

Objects and user content

The most tagged object was a 'Glass plate negative of view of the Shipard family at Bungowannah near Albury' (Powerhouse Museum) (http://museumgam.es/content-added-so-far/?obj_ID=672) with 76 tags and 1 fact, including tags describing the image content (named people, places, things, items of clothing), subjective descriptions, potential misspellings of given names, and image reproduction technologies.

The fact given on this object also illustrates the way in which some fact entries contain a mixture of personal and 'official' sources. Intriguingly, this entry also seems to include a reference to family history.

The most skipped object was the 'Robilt toy steam locomotive' (Powerhouse Museum) (http://museumgam.es/content-added-so-far/?obj_ID=602), which was passed by 25 players but still tagged in 9 turns. There were, however, 8 objects that did not gather any user content.

Future work on the games would attempt to resolve the issues of specialist data validation (discussed below) to create a more nuanced reward and level structure, and modify the game scenario and create more specific tasks for 'Donald'.

5. Effective game design and best practice for museum metadata games

Casual games

The growing genres of 'casual' games – "games with a low barrier to entry that can be enjoyed in short increments" (IGDA, 2009) – are ideal for most crowdsourcing games. Casual game genres include puzzles, word games, board games, card games or trivia games. Other features of casual games include easy-to-learn game-play, simple controls, addictive game-play, 'forgiving' game-play with low risk of failure, carefully managed complexity levels with a shallow learning curve and guidance through early levels, and inclusive, accessible themes (IGDA, 2009).

Casual games can bring huge audiences. The Casual Games Association states that over "200 million people worldwide play casual games via the Internet" (http://www.casualgamesassociation.org/faq.php#casualgames). A survey for games company PopCap found that "76% of casual game players are female", with 90% playing for "stress relief", while 73% identified "cognitive exercise (mental workouts)" as a reason for playing (Information Solutions Group, 2006). IGDA also cited "fun and relaxation" as player motivations.

The IGDA recommends casual games should be designed so they "[l]ook like a minimal time investment" as players who feel they can leave at any time will play more. Short rounds and frequent 'closure points' can encourage players to keep playing, especially if they feel their progress will be saved. For museums, curiosity about the next object also contributes to the "just one more" feeling that increases the number of turns per session.

Getting players started

Importantly, for museums looking for content about difficult objects, the IGDA also recommends that games be clear about the knowledge and skills the players will need, establishing any mental requirements immediately by making them integral to the game. For example, establishing that the games did not expect particular expertise from players was important for the MMG games, but other games may want to include a pre-game activity designed to test for the skills or knowledge required.

Casual games should need minimal exposition and should offer immediate gratification. Directions and instructions should be very short (i.e., one sentence), and ideally the game should build any instructions or game skill learning into the flow of the game.

Providing immediate access to game-play should be the first priority of a casual game page, but this should also be supported by an appeal to altruistic motives, with a short explanation or evidence for how playing the game helps a museum. The steve.museum project suggested that 'helping out' a museum may motivate some taggers (Trant, 2009), and the MMG project evaluation found that one opportunity for museum metadata games is the ability to 'validate procrastination' – players feel OK about spending time with the games because they're helping a museum. Publicizing the use of the data gathered helps show the impact of the games in the wider world. This also helps players understand the types of content other players have provided, and was cited as a reason to take more care when entering data in MMG playtests.

Registration is a barrier to participation. Participation rates drop drastically when registration is required, and it violates the requirements for minimal time investment, instant game-play and immediate gratification. Museums concerned about vandalism or spam can record game sessions by IP address and through cookies, allowing the bulk removal of suspicious data. It is worth noting that during the two-month open evaluation period for MMG, only two spam entries were added, and no vandalism was found (though some players tried 'test' entries). Where desired, players can be encouraged to register at appropriate points through prompts such as 'register to save your score' and 'lazy registration' design patterns (e.g. http://ui-patterns.com/patterns/LazyRegistration).
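Recording each contribution against a session identifier is what makes bulk removal possible. The following is a minimal sketch of that idea, not the MMG plugin's data model: the `Contribution` fields and the `remove_by_session` helper are hypothetical names, and the entries are invented.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    object_id: int
    text: str
    session_id: str  # e.g. the value of an anonymous cookie (assumed)
    ip_address: str


def remove_by_session(contributions, suspicious_sessions):
    """Split contributions into (kept, removed) by session identifier."""
    kept, removed = [], []
    for item in contributions:
        if item.session_id in suspicious_sessions:
            removed.append(item)
        else:
            kept.append(item)
    return kept, removed


# Invented entries; the IP addresses use the reserved documentation range.
entries = [
    Contribution(672, "family portrait", "s1", "192.0.2.10"),
    Contribution(602, "buy cheap pills", "s2", "192.0.2.99"),
    Contribution(602, "click here", "s2", "192.0.2.99"),
]
kept, removed = remove_by_session(entries, {"s2"})
print(len(kept), len(removed))
```

Keeping the removed items in a separate list, rather than deleting them outright, leaves room for a staff member to review and restore anything flagged in error.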

McGonigal (2008) discusses the challenges of engaging mass audiences against other competition for 'participation bandwidth', and suggests designing "feel-good tasks that can be accomplished quickly and easily" to get players participating. This could include simple data validation tasks such as voting for or 'liking' their favorite player-contributed fact or story, or clicking to report tags for review.

MMG's 'Dora' game showed that a character and a minimal narrative helped players demographically close to the design persona understand their role in the game immediately. Compared to the control non-game activity, players of the game expressed little concern about lacking expertise or 'saying the wrong thing', which might be explained by the ability of the character and narrative to invoke Huizinga's 'magic circle'.

Brooklyn Museum's game, Tag! You're It! (http://www.brooklynmuseum.org/opencollection/tag_game/), is a good model for responding to player actions while presenting both a 'local', immediately achievable goal (beat the player ranked just higher than you) and a 'global', long-term goal (beat the highest score). High score lists could be presented geographically (by city, region, country, continent) as well as temporally (hourly, daily, weekly, monthly, etc.). Players respond differently to different types of goals, so displaying a range of potential goals helps motivate more players overall. The MMG games showed that score tables can strongly motivate some players, but the games were also designed to work for players who aren't motivated by competition.
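The 'local' and 'global' goals described above can both be derived from a single score table. The sketch below is an illustration only and does not describe how Brooklyn Museum or MMG implemented their leaderboards; the function name and the sample scores are invented.

```python
def goals_for(player, scores):
    """Return (local_goal, global_goal) for a player.

    local_goal  -- (name, score) of the player ranked immediately above.
    global_goal -- (name, score) of the current top scorer.
    Both are None when the player already holds first place.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    names = [name for name, _ in ranked]
    position = names.index(player)
    if position == 0:
        return None, None
    return ranked[position - 1], ranked[0]


# Invented score table.
scores = {"ada": 120, "ben": 95, "cy": 310, "dee": 40}
local_goal, global_goal = goals_for("ben", scores)
print(local_goal, global_goal)
```

For the player "ben", the local goal is to pass "ada" on 120 points and the global goal is to pass "cy" on 310. Filtering `scores` by region or time window before calling the function would give the geographic and temporal tables suggested in the text.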

Building for pyramids of participation

Writing about mass participation projects, McGonigal (2008) states that participant types vary according to "what the participant wants, and how much the participant is willing to contribute." Another important factor specific to museum metadata games is matching the abilities of the participant – their skills, knowledge and experience – to the activities offered.

Cultural heritage crowdsourcing projects seem to demonstrate similar 'power laws' in participation rates. Both Waisda? and steve.museum found that a small number of users – 'super taggers' – contributed the majority of content (Oomen et al. 2010, Trant 2009). The MMG project showed a similar pyramid of participation (below). The majority of sessions comprised fewer turns, but there was a 'long tail' of a smaller percentage of players with a high number of turns per session.

Fig 5: Number of turns (Y axis) by number of sessions (X axis)
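A distribution like this can be summarized from a list of turns per session. The sketch below uses invented sample data, not the MMG results, and the 10% cut-off for the most active sessions is an arbitrary choice for illustration.

```python
from collections import Counter


def turns_histogram(turns_per_session):
    """Map number of turns -> number of sessions with that many turns."""
    return dict(sorted(Counter(turns_per_session).items()))


def share_from_top(turns_per_session, fraction=0.1):
    """Share of all turns contributed by the most active sessions."""
    ordered = sorted(turns_per_session, reverse=True)
    top_n = max(1, round(len(ordered) * fraction))
    return sum(ordered[:top_n]) / sum(ordered)


# Invented sample: many short sessions and one very long one.
sample_sessions = [1, 1, 1, 2, 2, 3, 4, 6, 12, 56]
histogram = turns_histogram(sample_sessions)
top_share = share_from_top(sample_sessions)
print(histogram)
print(f"{top_share:.0%} of turns came from the top 10% of sessions")
```

In this invented sample a single session accounts for well over half of all turns, which is the 'long tail' shape described above.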


Varying participation rates and levels of commitment can have important implications for design. McGonigal (2008) suggests including "micro-tasks or one-off tasks requiring minimal effort that individuals at the bottom of the distribution curve can successfully complete".

Building games with a purpose

Exploratory games like Donald aside, museum metadata game design should start with a clear sense of the metadata problem to be solved. What data is lacking, and is there enough information in the existing records to help players contribute appropriately? Von Ahn and Dabbish (2008) note that, particularly for crowdsourcing games, "there must be tight interplay between the game interaction and the work to be accomplished".

Most games can be specified through the non-trivial goal the players are trying to achieve, and the rules that define the allowable actions they can take to overcome the challenge. Von Ahn and Dabbish define further requirements specific to games with a purpose: the rules "should encourage players to correctly perform the necessary steps to solve the computational problem and, if possible, involve a probabilistic guarantee that the game's output is correct, even if the players do not want it to be correct." Validating the data generated through game-play is a vital part of their design process. Data validation methods include repetition of other player actions within the same game or in the suite of games. Validation activities also provide tasks to suit players at different levels in the 'pyramid of participation' (McGonigal, 2008).

Usefully for museums with limited design budgets and large collections to cover, the IGDA report recommends favoring "a variety of content over a variety of mechanics in a single game". Citing Sudoku, they report that adding similar content to the same game structure leads players to "greater feelings of mastery", as they can apply their existing knowledge of the game mechanics.

There are various arguments for and against designing for the possibility of failure. Given the issues around fears of contributing, it may be best not to make explicit failure a feature of museum crowdsourcing games. Variable levels of difficulty not only help keep the players in flow (the zone between boredom and anxiety) by varying the challenge in relation to skills (Csikszentmihalyi, 1990) but also introduce interesting uncertainty about the outcome. Variable levels of difficulty can be introduced through established game mechanics such as timed challenges.

Museum metadata games have an advantage here, as the variety of objects and record quality introduces an inherent level of randomness, creating varying levels of uncertainty about successful task completion within the game rules. As Von Ahn and Dabbish (2008) say, "[b]ecause inputs are randomly selected, their difficulty varies, thus keeping the game interesting and engaging for expert and novice players alike".

The MMG project investigated the use of content drawn from the entire catalogue and found that this approach was adequate for tagging games. However, games such as 'Donald' that ask the player to undertake a more specific task seem to benefit from tasks and activities matched with objects manually. The process of matching potential tasks to objects (or classes of objects) could also be crowdsourced. The object selection process can also benefit from data gathered in other games, by including additional content to support the catalogue entry or by dropping out objects that do not pass a threshold limit (e.g. for the number of times an object was skipped, or excluding objects that gathered three or fewer tags per turn).
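The threshold-based filtering suggested above might look like the following sketch. The statistics fields and the skip limit of 20 are hypothetical; only the 'three or fewer tags per turn' cut-off comes from the example in the text.

```python
def keep_in_rotation(stats, max_skips=20, min_tags_per_turn=3.0):
    """Decide whether an object stays in the pool offered to players.

    stats is a dict with 'skips', 'turns' and 'tags' counts (assumed shape).
    Objects skipped more than max_skips times are dropped, as are objects
    that gathered min_tags_per_turn or fewer tags per turn. Objects that
    have not been played yet are kept, since there is nothing to judge.
    """
    if stats["skips"] > max_skips:
        return False
    if stats["turns"] == 0:
        return True
    return stats["tags"] / stats["turns"] > min_tags_per_turn


# Invented statistics for four objects.
pool = {
    "object-a": {"skips": 25, "turns": 9, "tags": 40},   # skipped too often
    "object-b": {"skips": 2, "turns": 10, "tags": 25},   # 2.5 tags per turn
    "object-c": {"skips": 1, "turns": 10, "tags": 180},  # 18 tags per turn
    "object-d": {"skips": 0, "turns": 0, "tags": 0},     # not yet played
}
active = [name for name, stats in pool.items() if keep_in_rotation(stats)]
print(active)
```

Objects dropped from a tagging game in this way need not be discarded; they are candidates for the manually matched tasks discussed above.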

Crowdsourcing projects require iterative rounds of design and testing with the target audiences to ensure they meet their content goals. Museums' metadata games face particular challenges in helping their audiences feel engaged with the objects and game-play, and able to contribute appropriate content. Play testing should be part of the project from the first moment that activity types (or game atoms) suitable for your objects and desired content are devised, and continue as game scenarios are built around the core activities. This process can also help museums understand the best way to market their game to their target players.

Iterative development and testing is vital to achieve participation mechanics that are "minimally conceived and exquisitely polished" (Edward Castronova in McGonigal 2008).

Validating unusual data

The MMG games have a simple reward structure, with a set number of points assigned to each tag or fact added. MMG playtest participants expressed a desire for a metric based on tag or fact quality, but implementing this was beyond the scope of the project. The ideal model for these games would place a high reward value on valid and unusual tags, a medium value on common tags, a lower value on tags that are visible in the object metadata, and a penalty for invalid nonsense words. As players explore the game, they will test the boundaries of the reward system with nonsense and irrelevant words, and it is important to detect these experimental forays and only reward appropriate tags.
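The four-tier model described above can be sketched as a scoring function. This was not implemented in MMG; the point values, the `common_at` threshold and the `is_valid` callback are all hypothetical. In particular, `is_valid` stands in for the unsolved problem of recognizing a legitimate tag, and the word-list check used in the demonstration is only a placeholder.

```python
def score_tag(tag, catalogue_terms, tag_counts, is_valid, common_at=3):
    """Return points for a tag under a four-tier reward model.

    -5  invalid or nonsense word
     1  term already visible in the object's catalogue metadata
     5  common tag (entered at least common_at times before)
    10  valid and unusual tag
    All point values are invented for this sketch.
    """
    tag = tag.strip().lower()
    if not is_valid(tag):
        return -5
    if tag in catalogue_terms:
        return 1
    if tag_counts.get(tag, 0) >= common_at:
        return 5
    return 10


# Placeholder validity check: membership of a tiny invented word list.
known_words = {"steam", "engine", "boiler", "flywheel"}
catalogue_terms = {"engine"}
tag_counts = {"steam": 7}

points = {
    tag: score_tag(tag, catalogue_terms, tag_counts, known_words.__contains__)
    for tag in ["Steam", "engine", "flywheel", "asdfgh"]
}
print(points)
```

A fixed word list like the one above would reject any specialist term it does not contain, so a workable `is_valid` needs a better source of evidence than a general vocabulary.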

Matching tags from independent players is a common 'win condition' that provides validation through agreement on tags about a media item, and 'taboo' words can be introduced to encourage players to find more granular descriptions and greater coverage in tagging (e.g. Von Ahn and Dabbish, 2008). However, neither technique provides a solution for validating specialist tags, or a model for validating other types of long-form content such as facts.
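Validation through agreement, combined with taboo words, can be sketched in a few lines. This is a simplified illustration of the general technique, not the ESP Game's or MMG's implementation; the function name, the two-player threshold and the sample tags are assumptions.

```python
from collections import Counter


def agreed_tags(tags_by_player, taboo=frozenset(), min_players=2):
    """Return tags entered independently by at least min_players players.

    Taboo words are ignored, and each player counts once per tag.
    """
    taboo = {word.strip().lower() for word in taboo}
    counts = Counter()
    for tags in tags_by_player.values():
        unique = {tag.strip().lower() for tag in tags}
        counts.update(unique - taboo)
    return {tag for tag, n in counts.items() if n >= min_players}


# Invented tags from three players for one object.
round_tags = {
    "player1": ["Engine", "steam", "toy"],
    "player2": ["steam", "model", "engine "],
    "player3": ["boiler", "model"],
}
validated = sorted(agreed_tags(round_tags, taboo={"engine"}))
print(validated)
```

Here 'steam' and 'model' are validated, 'engine' is excluded as taboo, and 'toy' and 'boiler' remain unvalidated because only one player entered each. A correct but rare specialist term would sit unvalidated in the same way.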

Tags that reference specialist knowledge are less likely to have been previously entered in the game and are therefore penalized under models that validate tags against a corpus of previous content. The more precise or specialist a term, the greater the chance it will be rejected as a nonsense word, yet previously unknown tags could contain valuable information such as specialist knowledge related to the collections; corrections of common misidentifications or stereotypes; languages other than English; or be informed by particular domain knowledge or personal experience of the objects. For example, encouraging specialist terms enabled greater coverage in the MMG playtests – an architecturally-trained participant tagged by materials and a graphic designer familiar with searching picture libraries used subject keywords.

Asynchronous manual review (where other players validate the tags applied by previous players) requires a critical mass of players or institutional resources, and introduces awkward issues of delayed gratification or penalties for points validated or rejected after the play session. Automated solutions for detecting and rewarding specialist terms without allowing players to 'cheat' the game by entering nonsense or irrelevant words would be a useful area for future research. The lack of a qualitative validation model for long-form or specialist data is a barrier to the wider success of metadata games.

New relationships with specialists

A critical mass of available objects creates opportunities for specialist metadata gathering while forging new relationships with audiences close to a museum. Von Ahn and Dabbish (2004) suggest "theme rooms" where self-selecting players can choose to play with images "from certain domains or with specific types of content", in turn generating more specific tags. Potential specialist audiences include subject specialists, students and researchers; people who have helped create or had direct experience using the objects; collectors; and hobbyists. This is one solution to the issue of specialist content validation discussed elsewhere, and also creates opportunities for different forms of cooperative and competitive game-play.

However, museums need to consider the requirements for adequate collections data as input into the games, validation requirements, specialist marketing and outreach, and the design issues around signaling the competencies required to play the games. For example, how much skill is required to recognize, classify and describe objects with specialist terms? Is the size of the potential audience large enough to justify the resources required, or do they already have access to sites that meet similar needs? If personal experience of the objects is within living memory, are there any issues with the age or technological skills of the audience?

6. A suite of museum metadata games?

The potential for a model for applying different types of games to different content gathering and validation requirements emerged during the research and development phases of MMG. Other specialist metadata projects have uncovered a similar requirement: "[h]aving a suite of games enables database managers to custom link to the most effective and appropriate game front ends for their data" (Tiltfactor, undated).

Von Ahn and Dabbish (2008) describe three GWAP 'templates' (output-agreement games, inversion-problem games, and input-agreement games) that "can be applied to any computational problem"; these could be usefully investigated in this context.

Mapping collections, activities and player abilities and motivations across the models that follow could help generate ideas for suites of games designed to address different aspects of the player content and object data lifecycle.

Object cataloguing and object significance

The extent of cataloguing varies greatly in many history museums. This model shows common correlations between the amount and type of information and the number of objects of each type. The state of the catalogue will depend on institutional history, but there is an obvious opportunity for games in collections where the potential significance of an object does not match the level of information recorded about it.

| Information type | Amount of information | Proportion of collection |
|---|---|---|
| Subjective | Contextual history ('background, events, processes and influences') | Tiny minority |
| Mostly objective, may be contextual to collection purpose | Catalogued (some description) | Minority |
| Objective | Registered (minimal) | Majority |

Table 1: Object cataloguing and significance

Refer to 'Significance 2.0' for the full definitions of registered, catalogued and 'contextualised history' records (Russell and Winkworth, 2009).

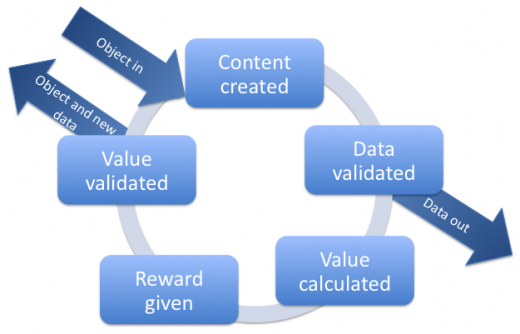

Contributed data lifecycle

The points at which player-contributed data can be passed between games as input into game-play, for validation in other games, or into another game, are outlined below.

Content is created about objects in the game; the content is validated; a game-dependent value is assigned to the content; and the player is rewarded. The value of a piece of content may also be validated (e.g. for 'interestingness') when other players show preferences for it. At this point, the object and the new content about it can be used in a new game or presented on a collections page.

For some content types, the content may be validated by players in another game after a default value has been calculated and the player has been rewarded. If the content is found to be false, the reward can be revoked.

Fig 6: Content paths into, through and out of individual games
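The second path, in which a player is rewarded at a default value and the content is validated later, implies that rewards must be revocable. The sketch below models that path under stated assumptions: the status names, the default of 5 points and the helper functions are all hypothetical, and the fact text is invented.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    CREATED = "created"
    REWARDED = "rewarded"    # default value assigned and points given
    VALIDATED = "validated"  # confirmed later, e.g. in another game
    REJECTED = "rejected"    # found to be false; reward revoked


@dataclass
class Content:
    player: str
    text: str
    points: int = 0
    status: Status = Status.CREATED


def reward(content, scores, default_points=5):
    """Assign a default value and credit the player immediately."""
    content.points = default_points
    content.status = Status.REWARDED
    scores[content.player] = scores.get(content.player, 0) + default_points


def review(content, scores, accepted):
    """Apply a later validation result, revoking points on rejection."""
    if content.status is not Status.REWARDED:
        raise ValueError("only rewarded content can be reviewed")
    if accepted:
        content.status = Status.VALIDATED
    else:
        scores[content.player] -= content.points
        content.points = 0
        content.status = Status.REJECTED


scores = {}
fact = Content("player1", "An invented fact about an object")
reward(fact, scores)
score_after_reward = scores["player1"]
review(fact, scores, accepted=False)
print(score_after_reward, scores["player1"], fact.status.value)
```

Validated content could then be passed on as input to another game or published on a collections page.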


Activity types and data generated

These activities can be applied to museum objects, and built into games through the design of rules and concepts or narratives. Data might include information available to curators if resources were infinite, and data only available to non-museum staff, including everyday descriptive language and personal experience of the subjects or objects.

The type of data input required will depend on the collection. Each activity assumes the presence of an image, or access to the original object. Potential levels of participation in various activities could be mapped to the pyramids of participation cited in McGonigal (2008) and various player motivation models (e.g. Nicole Lazzaro, Nick Yee).

| Activity | Data generated | Validation role, requirements |
|---|---|---|
| Tagging | Tags, folksonomies, multilingual term equivalents. General; specialist. Objective; subjective. | Repeated tags provide validation through agreement. Some automated validation on common terms. |
| Debunking (e.g. flagging content for review or researching and providing corrections). | Possibly provides corrected data to replace erroneous data. General; specialist. Objective only. | Can flag tags, links, facts for review. Should not be used on subjective personal stories. |
| Recording a personal story | Oral histories; contextualising detail; eyewitness accounts. Generally subjective. | Moderation: should be handled sensitively. |
| Linking (e.g. objects with other objects, objects to subject authorities, objects to related media or websites). | Relationship data; contextualising detail; information on history, workings and use of objects; illustrative examples. General; specialist. Generally objective. | Can be validated through preference selection. |
| Stating preferences (e.g. choosing between two objects; voting; 'liking'). | Preference data, selecting subsets of 'highlighted' objects or 'interestingness' values for different audiences. May also provide information on reason for choice. Generally subjective. | Can help validate most forms of data, though the terms on which items are being valued should be considered. |
| Categorizing (e.g. applying structured labels to a group of objects, collecting sets of objects or guessing label or relationship between presented set of objects). | Relationship data; preference data; insight into audience mental models; set labels. | Repeated labels or overlapping sets provide validation through agreement. |
| Metadata guessing games (e.g. guess which object in a group is being described) | Tags; structured tags (e.g. 'looks like', 'is used for', 'is a type of') | Successful clues provide validation through agreement. |
| Creative responses (e.g. write an interesting fake history for a known object, or description of purpose of a mystery object.) | Relevance, interestingness, ability to act as social object; common misconceptions. | Consider acceptable levels of criticism from other players. |

Table 2: Activity types
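Several rows of the table rely on validation through agreement. As a rough sketch of how that might work for tagging, the function below accepts a tag for an object only once enough different players have entered it. The function name `validated_tags`, the `(object_id, player, tag)` input format, the normalisation step and the threshold of two players are assumptions made for illustration, not details of the games described in this paper.

```python
from collections import defaultdict


def validated_tags(submissions, min_players=2):
    """Return, per object, the tags entered by at least `min_players` distinct players.

    `submissions` is an iterable of (object_id, player, tag) tuples.
    """
    players_by_tag = defaultdict(set)
    for object_id, player, tag in submissions:
        # Fold case and collapse whitespace so trivially different tags agree.
        normalised = " ".join(tag.lower().split())
        if normalised:
            players_by_tag[(object_id, normalised)].add(player)

    result = defaultdict(set)
    for (object_id, tag), players in players_by_tag.items():
        if len(players) >= min_players:
            result[object_id].add(tag)
    return dict(result)


submissions = [
    ("obj-1", "alice", "Microscope"),
    ("obj-1", "bob", "microscope "),
    ("obj-1", "alice", "brass"),
    ("obj-1", "alice", "brass"),  # the same player repeating a tag is counted once
    ("obj-2", "carol", "teapot"),
]
agreed = validated_tags(submissions)
```

Counting distinct players rather than raw submissions means one enthusiastic player cannot validate their own tags. The same pattern could be applied to repeated labels in categorizing activities.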

These collated models provide input into future game design activities. Devising games that will produce fun, engaging experiences while generating useful data in the context of particular collections is left as an exercise for the reader.

7. Acknowledgements

With thanks to the Powerhouse Museum and Science Museum, London, for their collection APIs, the game design workshop participants, playtest participants and correspondents, and everyone who played the games online.

8. References

Howe, Jeff (2006). The Rise of Crowdsourcing. Wired 14.06. Last consulted October 8, 2010. Available http://www.wired.com/wired/archive/14.06/crowds.html

Information Solutions Group (2006). Women Choose "Casual" Videogames Over TV; 100 Million+ Women Now Play Regularly, For Different Reasons Than Men. Last consulted August 6, 2010. Available http://www.infosolutionsgroup.com/pdfs/women_choose_videogames.pdf

International Game Developers Association (IGDA) (2009). 2008-2009 Casual Games White Paper. Last consulted December 31, 2010. Available http://www.igda.org/sites/default/files/IGDA_Casual_Games_White_Paper_2008.pdf

Jones, S., P. Lynch, N.A.M. Maiden and S. Lindstaedt (2008). "Use and Influence of Creative Ideas and Requirements for a Work-Integrated Learning System". In Proceedings RE08, 16th International Requirements Engineering Conference. Barcelona, Catalunya, Spain, September 8-12, IEEE Computer Society Press, pp 289 – 294, 2008.

Karvonen, Minna (2010). "Digitising Museum Materials – Towards Visibility and Impact". In Pettersson, S., M. Hagedorn-Saupe, T. Jyrkkiö, A. Weij (Eds). Encouraging Collections Mobility In Europe. Collections Mobility. Last consulted February 26, 2011. Available http://www.lending-for-europe.eu/index.php?id=167

Koster, Raph (2005). A Theory of Fun. Paraglyph Press, Scottsdale, Arizona.

McGonigal, Jane (2008). Engagement Economy: the future of massively scaled collaboration and participation. Last consulted June 10, 2010. Available http://www.iftf.org/system/files/deliverables/Engagement_Economy_sm_0.pdf

The Nielsen Company (2009). Insights on Casual Games: Analysis of Casual Games for the PC. Last consulted February 23, 2011. Available http://blog.nielsen.com/nielsenwire/wp-content/uploads/2009/09/GamerReport.pdf

Oomen, J., et al. (2010). "Emerging Institutional Practices: Reflections on Crowdsourcing and Collaborative Storytelling". In J. Trant and D. Bearman (eds). Museums and the Web 2010: Proceedings. Toronto: Archives & Museum Informatics. Published March 31, 2010. Last consulted February 19, 2011. Available http://www.archimuse.com/mw2010/papers/oomen/oomen.html

Russell, R., and K. Winkworth (2009). Significance 2.0: a guide to assessing the significance of collections. Collections Council of Australia. Last consulted September 9, 2009. Available http://significance.collectionscouncil.com.au/

Tiltfactor (undated). Metadata games. Last consulted 19 November 2010. Available http://tiltfactor.org/?page_id=%201279

Trant, J. (2009). "Tagging, Folksonomy and Art Museums: Results of steve.museum's Research". In: Archives & Museum Informatics: 2009. Last consulted June 10, 2010. Available http://museumsandtheweb.com/files/trantSteveResearchReport2008.pdf

Von Ahn, L., and L. Dabbish (2004). "Labeling Images with a Computer Game". CHI 2004, April 24-29, 2004, Vienna, Austria.

Von Ahn, L., and L. Dabbish (2008). "Designing games with a purpose". Commun. ACM 51, 8 (August 2008), 58-67. Available http://doi.acm.org/10.1145/1378704.1378719