Mixing Realities to Connect People, Places, and Exhibits Using Mobile Augmented-Reality Applications

Rob Rothfarb, Exploratorium, USA

http://museumvirtualworlds.org

Abstract

With mobile augmented-reality (AR) technologies and applications, museums can extend their relationship with visitors beyond physical boundaries to engage them further in discovery-based learning.

Using freely available mobile AR authoring platforms, the Exploratorium is experimenting with extensions to both its physical and Web exhibit spaces to allow visitors to interact with exhibits and with natural phenomena in the San Francisco Bay Area. Applications developed with two platforms, Layar and Junaio, allow mobile users with AR-capable smartphones (equipped with a camera and GPS) to explore their surroundings through live camera views and to interact with overlaid multilayered, georeferenced information. Interactive content “layers” let visitors access annotated information about physical exhibits and locations in outdoor spaces, interact with art installations inside the museum, and explore temporal data about objects and locations.

This paper discusses content creation for mobile AR-experience design using these two platforms, strategies for incorporating preparation of mobile AR-ready information into a digital content-creation workflow, and ways to think about measuring the effect on the visitor experience.

Keywords: augmented reality, mixed reality, mobile applications, virtual worlds

1. Blending Real and Virtual

“Someone told me that cyberspace was ‘everting.’ That was how she put it.”

“Sure. And once it everts, then there isn’t any cyberspace, is there? There never was, if you want to look at it that way. It was a way we had of looking where we were headed, a direction. With the grid, we’re here. This is the other side of the screen. Right here.”

(Writer/musician Hollis Henry talking with geohacker Bobby Chombo, character dialogue from William Gibson’s Spook Country) (2007)

The Global Positioning System (GPS), a network of linked satellites that allows terrestrial devices to receive location information, was made available publicly in 2000. Since then, many commercial and public applications that tap into the geospatial location grid have become available. Many are focused on mapping and navigation, and there are some wonderful explorations underway in using the technology for learning, communications, and the arts. As personal and mobile computing evolve and broadband networking technologies become more pervasive, more museums and other cultural institutions are experimenting with using location-awareness as a dynamic mode of interaction with visitors and with their extended communities.

One application that takes advantage of location-awareness is Augmented Reality (AR). It is the overlaying of information onto a physical object or place, as seen through an electronic display or listened to through an audio device. The overlaid information is called a Point of Interest or POI. AR is an application built on several other technologies: specifically, networking, image recognition, image processing, audio mixing, and geospatial data techniques. The factors in consumer electronics and telecommunication that are currently propelling the creation of AR tools include webcams built into desktop and laptop computers, smartphones with GPS and high-resolution cameras and displays, real-time 3-D graphics, and WIFI and 3G/4G cellular networks. Museum exhibit and experience design developers can now use a growing array of available tools to create exhibits and events with AR elements, both on the floor of the museum and for locations outside of the museum using mobile devices.

AR applications typically fall into one of two types: (1) GPS-based, in which information is overlaid onto a geospatial grid, making discrete information points accessible when a person is physically located at or near a specific location, and (2) marker-based, which uses image detection to augment a physical object or place and does not rely on GPS for overlaying of information. Markers, also called fiducial images, can be 2-D images or even full-color images and illustrations. Some hybrid systems, including the Junaio mobile platform, combine the use of GPS and markers, improving the accuracy of the user’s position information. This is useful for indoor geolocation and placement of POIs.

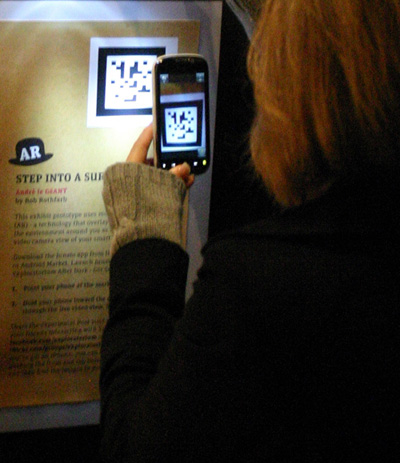

Fig 1: Exploratorium After Dark visitor scanning a 2-D LLA (Longitude-Latitude-Altitude) marker to access a 3-D augmented-reality exhibit on her smartphone

Software libraries for developing desktop AR applications have been available for a couple of years, driven by a thriving community of developers who continue to expand the capabilities of these tools. Multimedia content developers use them to create applications that are accessible to online visitors using computers with webcams. The FLARToolKit, a popular set of tools for Flash ActionScript, can be used to build AR applications that allow museum visitors to interact with virtual content using 2-D marker images. Flash is supported on only some smartphones, but the ARToolKit, from which the FLARToolKit is adapted, is available for use with mobile platforms.

In the fall of 2009, for its 40th anniversary, the Exploratorium experimented with a customized version of the FLARToolKit code to create an interactive experience. We placed a marker image in the cover design of Explore, our quarterly publication for museum members, and included the URL of the AR application’s host (http://www.exploratorium.edu/40th/surprise/run/index.html) in the publication. When visited, the application activates the user’s desktop webcam and recognizes the marker when the image containing it is detected. The application then augments the live view of the marker with a celebratory birthday cake, complete with animated candles. Visitors can blow out the candles on the cake by blowing into the webcam microphone, and watch the flames flicker and disappear. In keeping with the GNU General Public License for the FLARToolKit, the Exploratorium has made the customized software and content for its 40th Anniversary 1969–2009: Surprise! freely available to developers at http://www.exploratorium.edu/40th/surprise/ar-code.zip.

Fig 2: A 2-D marker is recognized on the cover of the Explore quarterly publication to trigger an animated 3-D model to appear on screen

Other museums are also developing and showing exhibits that include augmented reality, including the Stedelijk Museum in Amsterdam, the Andy Warhol Museum in Pittsburgh, and the Powerhouse Museum in Sydney. In the summer of 2010, the Ontario Science Centre exhibited several works of AR artist Helen Papagiannis, including a work that was also available online to visitors, called The Amazing Cinemagician. In the museum, the exhibit included projections of different film clips by Georges Méliès on a fog screen. The online version allowed visitors to explore the clips in a pop-up diorama on their computer screen by scanning a 2-D marker.

2. Building Virtual Exhibits Using Mobile Augmented Reality

For creating mobile AR applications, content developers use AR software toolkits and networked services, digital content creation tools, GPS, mapping tools, and client-server scripting.

In 2009 and 2010, several toolsets emerged for creating AR content on mobile platforms. Two platforms, Junaio (http://www.junaio.com) and Layar (http://www.layar.com), are actively being developed and provide a rich set of features. Both are from commercial companies that make content creation tools and network applications available to content creators. By registering as a developer with each, you get access to mobile application services that allow you to develop, test, and publish your own AR “channels” or “layers” within their freely available browser applications. Both companies also provide code toolkits for developing stand-alone mobile apps. Although this is a more involved process, it offers great potential for museums to create their own mobile apps with AR features. There are other mobile AR toolsets available as well, including ones from Qualcomm and Nokia and from open source projects. Some AR platforms are specific to individual mobile devices or to devices made with certain chipsets. Junaio and Layar have the advantage of working on both iPhone and Android devices, two of the most common smartphone platforms.

The Junaio and Layar AR browsers provide the location awareness for the smartphone’s position and deliver the images, video, audio, text, and 3-D models to the device by communicating with the application service providers’ back-end systems through a cellular or WIFI network. The back-end systems request POI content and actions from the developers’ Web servers. Developers can use hosted content-management systems (CMSs) to assemble and deliver their points-of-interest content, or host their own content on their own servers. For the latter, both platforms include starter code sets to develop Web services that provide the POI content in response to location and event-based queries coming from the AR browser. Using the popular PHP scripting language and some structured database access libraries, it’s fairly straightforward to develop scalable Web services that can provide the location-contextualized POI objects that the smartphone user sees and can interact with. The Web service can pull in data from existing exhibits, Web sites, online event calendars, and other applications. Imagine scanning the horizon with your smartphone, detecting a POI for your museum, and seeing information scroll by about the current exhibitions and the day’s events.
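As a rough illustration of the kind of location-based query such a Web service answers, here is a minimal sketch in Python (the platforms' starter code is in PHP). It filters a small list of POIs by great-circle distance from the user and returns them as JSON. The POI records, the function names, and the shape of the JSON response are all assumptions made for illustration; they are not the Layar or Junaio request or response formats, which each platform documents separately.

```python
import json
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

# Hypothetical POI records; ids, titles, and coordinates are illustrative only.
POIS = [
    {"id": "wave-organ", "title": "Wave Organ", "lat": 37.8085, "lon": -122.4401},
    {"id": "fort-mason", "title": "Outdoor Exploratorium", "lat": 37.8067, "lon": -122.4310},
    {"id": "far-away", "title": "Distant POI", "lat": 37.3382, "lon": -121.8863},
]


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_pois(user_lat, user_lon, radius_m, pois=POIS):
    """Return the POIs within radius_m of the user, nearest first, with distance attached."""
    hits = []
    for poi in pois:
        distance = haversine_m(user_lat, user_lon, poi["lat"], poi["lon"])
        if distance <= radius_m:
            hits.append({**poi, "distance_m": round(distance)})
    return sorted(hits, key=lambda p: p["distance_m"])


def poi_response(user_lat, user_lon, radius_m):
    """Serialize the nearby POIs as JSON (an assumed response shape, not a platform schema)."""
    return json.dumps({"pois": nearby_pois(user_lat, user_lon, radius_m)})
```

A call such as `poi_response(37.8067, -122.4310, 1500)` would return only the two POIs near the user's position. In a real service the POI list would come from a database or from existing exhibit and calendar data rather than from a hard-coded list.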

AR on the go

The Exploratorium has recently developed an augmented reality mobile layer which is designed to engage viewers of its video series, Science in the City, with inquiry activities as they encounter locations in the San Francisco area relevant to the topics and stories in the series.

Within the Science in the City mobile AR experience, a set of POIs augment exhibits in the Outdoor Exploratorium collection at Ft. Mason Center with streaming audio from the Outdoor Exploratorium Web site. The intent is to help direct visitors in the immediate area to the location of the outdoor exhibits and to provide additional information about the phenomena that can be explored with them.

Fig 3: Augmentation of Outdoor Exploratorium exhibit Pier Piling Pivot at Ft. Mason in San Francisco using the Layar mobile platform, with streaming exhibit audio, link to exhibit Web site, and mobile navigation

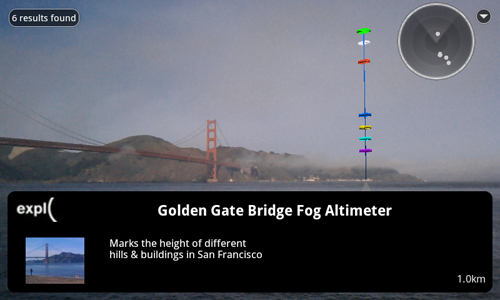

Another mobile AR exhibit follows the theme of a Science in the City episode about microclimates and allows visitors to use a 3-D model of a Golden Gate Bridge tower, outfitted with markers designating the height of different hills and buildings around San Francisco, to investigate the height of fog, if present, in the bay. The exhibit becomes a virtual instrument that visitors can use to measure the altitude of fog in the bay by observation and to learn about weather phenomena that affect fog penetration into different parts of the city – a “take it with you” tool that can be used for personal investigation.

Fig 4: Prototype version of Golden Gate Bridge Fog Altimeter POI in the Layar mobile platform, with streaming audio explaining color-coded height markers corresponding to the altitude of different local features

Other POIs mark the spots of interesting geologic features, including the San Andreas fault, different types of rock formations, and the visible evidence of the action of blocks of rock on either side of a fault that have moved relative to each other. These POIs are designed to engage the visitor in noticing local environment features that they may not have paid much attention to or known about.

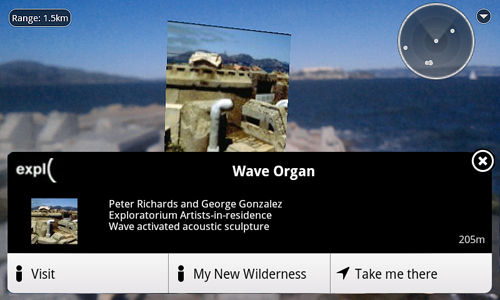

The layer includes other POIs that augment two outdoor acoustic sculpture-instruments, the Aeolian Harp, mounted on the front façade of the Exploratorium, and the Wave Organ, a wave-activated acoustic sculpture on a jetty in San Francisco Bay. The AR elements provide information about the exhibits, including artist statements.

Fig 5: Augmentation of acoustic wave sculpture, the Wave Organ in San Francisco, using the Layar mobile platform, with links to exhibit Web page and to Driven: Stories of Inspiration audio slideshow series program My New Wilderness, featuring artist Peter Richards talking about his sculpture.

AR in the museum

The Exploratorium is also experimenting with AR in the museum. We plan to augment some exhibits with virtual features that allow further investigation of the phenomenon they demonstrate and to use AR to engage visitors at events.

On Feb 3, 2010, visitors to the Exploratorium’s After Dark monthly evening event for adults interacted with four mobile AR installations located at different points in the museum and designed specifically for the event theme. Using the Junaio AR Browser on their iPhone or Android phone, they scanned 2-D Longitude-Latitude-Altitude (LLA) markers to reveal 3-D objects with attached streaming audio and video. The theme for the event, Get Surreal, encompassed surrealism in art, music, and science; those themes extended into cyberspace through the visitor interactions with the AR elements.

One exhibit allowed visitors to recompose surrealist painter René Magritte’s painting, The Son of Man, by overlaying a 3-D bowler hat and apple over their friend’s face and taking a snapshot on the phone.

Fig 6: Magritte Me. Recomposing a Magritte painting using augmented reality at the Exploratorium’s After Dark: Get Surreal event

Another exhibit gave visitors a chance to recompose surrealist artist Man Ray’s black-and-white photograph Observatory Time—The Lovers, in which an odalisque figure reclines pointing to a painting with floating lips. In the AR-enhanced recomposition, we repurposed an exhibit and space where two large sodium lights affect visitors’ perception of color by rendering their surroundings in a yellowish monochromatic hue. After Dark visitors had their friends recline on a bench covered in black velvet and point up towards a seemingly empty area above their head in front of a mountainous landscape backdrop. The lips were inserted into the virtual view of the scene on the smartphone as floating 3-D objects.

Fig 7: Odalips. Recomposing a Man Ray photograph using augmented reality at the Exploratorium’s After Dark: Get Surreal event

At another location, visitors scanned a marker and became surrounded by a large-scale virtual sculpture inspired by Salvador Dali’s The Disintegration of the Persistence of Memory in which his prior iconic work, The Persistence of Memory, appears to be exploding into a moving grid of particles. Touching any of the cubic objects in the virtual sculpture on the touch screen of the phone triggered a surreal video to play on the device.

Fig 8: Three views of Redisintegration No. 1 augmented-reality installation at the Exploratorium’s After Dark: Get Surreal event

As each of the exhibit markers was scanned, visitors also saw and heard a tiny blue ant crawling over their phone screen, speaking in French in the voice of surrealist poet André Breton from an interview he gave in 1950 about the surrealist philosophy. At another location, a fourth marker could be scanned to reveal the ant as a 3-D giant appearing to be crawling toward the visitor – another reference to images in Dali’s work.

Fig 9: Visitor interacting with André le GéANT, an augmented-reality installation at the Exploratorium’s After Dark: Get Surreal event

These exhibits were designed to allow visitors to be playful and to explore the mixed reality in the museum of people, exhibits, and augmented reality elements.

How to make and prepare 3-D models for mobile AR

One of the rich media capabilities of mobile AR platforms is the ability to display 3-D models, even animated ones, on the users’ smartphones. Models can help engage users with activities, games, and structured learning scenarios by providing a focal point for interaction. While real-time 3-D rendering capabilities for gaming platforms, including some mobile devices, continue to advance, mobile AR hasn’t caught up to them yet due to added challenges of needing to deliver the 3-D content on-demand and the size of detailed models. Preparing models for this real-time 3-D environment can be challenging, given these limitations, but it’s possible to make decent-looking models with animated effects. Here are some basic steps.

- Start with a 3-D modeling program that you’re comfortable with. If you’re not used to this type of digital content creation tool, choose a simple one, as tools geared toward high-end users in broadcast/gaming/film production can be very overwhelming and have a steep learning curve. Focus on tools that offer lots of modeling options and look for a polygon reduction feature – you’ll need it! Blender is a powerful open-source tool that’s freely available, but its unique interface takes a fair amount of time and study to get up to speed with. A low-cost, capable tool to consider is AC3D from Inivis (http://www.inivis.com/). It has powerful modeling and texture-mapping capabilities, is easy to get started with, and can import and export 3-D models in many formats.

- Create models or import and modify them. There are several Web sites that offer free, Creative Commons-licensed, or low-cost models, including Google’s 3D Warehouse (http://sketchup.google.com/3dwarehouse). Use tutorials that come with the program and find available online resources with more examples of modeling techniques for the software you’re using. If you have 3-D assets from other projects you want to use, you’ll need to reduce the mesh and adjust or possibly retexture the model (see steps below). You may need to convert existing models from one type to another in order to prepare them for use as POI objects. OBJ, MD2, and DAE are common formats for 3-D POIs on mobile AR systems.

- Convert meshes to triangles. Some models and tools allow you to create models using quads or spline curves. Most real-time rendering platforms, including current mobile AR rendering systems, however, require meshes to be composed of triangles. All 3-D modeling tools allow you to create in triangles or to convert meshes made from these other types of structures to triangles.

- Reduce polygons. The main difference between 3-D models created for print renderings, film, or video, and models created for real-time environments such as mobile AR platforms, is the complexity of the model meshes. The more complex the model, the more realistic it can look, given other factors such as texture mapping and lighting. To keep the file size small so that the renderer on the phone can load the model quickly, and to reduce the network transport time of the model between the server and the phone, you have to reduce the mesh complexity quite a bit. You can do this manually by combining faces in the model and using other modeling techniques, or you can use a polygon-reducer function in the modeling software or as a stand-alone tool. It’s much easier to reduce polygons inside of the modeling tool, however, because it’s an iterative process whose results you have to analyze as you go. As you reduce polygons, keep looking at your model from different angles. Orthogonal views and a positionable camera view are great ways to observe the effects of polygon reduction. Try reducing by 90 percent of model size and repeat the process until your model size is at your target for number of faces/vertices or file size. The basic rule is to keep reducing until you observe your model’s surfaces degrade past an acceptable point. You want to be able to keep the shape as intact as possible, and not inadvertently punch any holes in the model that could be seen by the viewer. You can also manually remove any back/bottom/top facing triangles that you’re sure won’t be viewable by the user.

- Prepare suitable texture maps. Another key difference between complex rendering applications and real-time rendering applications is the type and size of texture maps – the “skin” of your 3-D model. For mobile AR, you need to keep the dimension of these images small, typically 512 x 512 pixels and smaller, and compress them, as much as possible, to a reasonable compression level in either JPG or PNG file format. Take advantage of the texture mapping tools in the modeling program you’re using, which let you size, tile, and offset an image as a map on a 3-D object. The most economical method for texture mapping, and one used frequently by game developers, is UV or UVW mapping. This process transforms an image to a set of coordinates on a 3-D model. It’s possible to combine several texture maps into a single image file and use this technique to apply different portions of the image to different faces on the model. Another key difference between sophisticated 3-D modeling and rendering applications and real-time 3-D such as mobile AR is the ability to use virtual lights in your 3-D scene to light your model and simulate different types of lighting. Currently, in order to keep the 3-D graphics working smoothly on different hardware, the mobile AR platforms don’t support real-time lighting. So, to display models that appear to have more depth and realism, you’ll need to prelight your textures. This technique is often called “baking.” Most modeling programs allow you to render an image of a model from within the program using lights that you arrange in your 3-D scene. You can save these rendered images, slice them up as necessary, and then apply them as textures on the models. Remove any virtual lights from your 3-D scene before exporting the model, or export it without any lights.

- Package model and textures for publishing. Mobile AR platforms are also using encryption and proprietary bundling of 3-D models, textures, and texture-mapping coordinate data for content security and efficiency in loading this type of content into the AR browser. You’ll need to follow the guidelines laid out by each platform to export and prepare 3-D models to formats and files they can serve. Junaio provides a Web service for encrypting 3-D models, and Layar provides a stand-alone model-conversion utility.
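To make the triangle-conversion step above concrete, the following sketch splits polygon faces into triangles using a simple fan and checks the result against a face budget. It is only an illustration of what a modeling tool does internally when it converts quads to triangles: the function names and the budget value are hypothetical, the fan approach assumes each face is convex and planar, and spline surfaces would first need to be tessellated by the modeling tool.

```python
def triangulate_face(face):
    """Split one convex polygon face (a list of vertex indices) into a fan of triangles."""
    if len(face) < 3:
        raise ValueError("a face needs at least three vertices")
    first = face[0]
    # Each triangle shares the first vertex and one edge of the original polygon.
    return [(first, face[i], face[i + 1]) for i in range(1, len(face) - 1)]


def triangulate_mesh(faces):
    """Triangulate every face of a mesh and return one flat list of triangles."""
    triangles = []
    for face in faces:
        triangles.extend(triangulate_face(face))
    return triangles


def within_budget(triangles, max_triangles):
    """Report whether the mesh fits a target triangle count (the budget is an assumption)."""
    return len(triangles) <= max_triangles


# A cube described as six quad faces over eight vertices (indices 0 to 7).
CUBE_QUADS = [
    [0, 1, 2, 3], [4, 5, 6, 7], [0, 1, 5, 4],
    [2, 3, 7, 6], [1, 2, 6, 5], [0, 3, 7, 4],
]

cube_triangles = triangulate_mesh(CUBE_QUADS)
```

Each quad becomes two triangles, so the cube ends up with twelve. A check such as `within_budget(cube_triangles, 2000)` could then be repeated after each polygon-reduction pass, using whatever target the chosen platform recommends.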

Fig 10: Planning the Golden Gate Bridge Fog Altimeter 3D Model

Design considerations

Since AR on mobile platforms is new, and mobile devices and networks are rapidly improving, museums have many issues to address in designing AR experiences. Probably the most fundamental issue for designers to consider is that museum staff and exhibit designers do not want visitors to walk around in the museum absorbed in the mobile screen. We consider this behavior antisocial, unsafe, and a potential deterrent to visitors directly engaging with exhibits and other people in the space. Audio tours on mobile devices presented this challenge on a basic level for some types of institutions, but the proliferation of mobile personal computing, and applications that engage mobile users visually and through touch, take this challenge to the next level. Through experimentation, museums will find an appropriate balance in how they engage visitors through personal communication devices as part of the mix of what they offer, with offerings such as AR content being tied to specific locations.

Interface design

Though AR platforms offer “channels” and “layers” for content developers to publish with, mobile users must discover or navigate to the information. It’s likely that these platforms will begin to include features for push notification of nearby POIs with user-selectable filtering of content type. This will help with visitors’ navigation and discovery of museum content on their devices. In lieu of that type of feature being available, content developers can take advantage of existing methods AR platforms offer for mobile users to browse through available content channels near their current location. Creating effective naming of the channel and using channel description text and icon features the platforms offer are good strategies. Some platforms also provide external hyperlinking capabilities. This allows developers to share shortcut links directly to AR channels via mobile Web and social applications. The feature does require that the mobile user already have the AR browser application installed, however. Creating stand-alone AR applications provides the most flexibility in interface design, though this approach has the disadvantage of mobile users not being able to discover museum content through location-aware features of AR browsers.

Wayfinding

One of the simple approaches to applying AR in a museum setting is wayfinding. While currently somewhat difficult in interior spaces due to the limitations of GPS accuracy inside of buildings, this difficulty will eventually ease as other methods of automatically detecting a visitor’s position inside a building become available features of AR platforms. That functionality could be based on RFID, near-field communication technologies, Bluetooth, triangulation between fixed locations of WIFI networks within museums, enhanced location detection capabilities of cellular networks, or via other methods. Currently, using AR for wayfinding in outdoor areas works well, as location accuracy within a few meters is generally acceptable. The key question in using AR for wayfinding is whether it’s as effective for visitors as other methods. A wayfinding-oriented AR application might be best suited as a tool for exploring an outdoor space such as a sculpture park, an arboretum, or a zoo, where on-screen navigation elements can indicate locations and paths to areas of interest.
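For outdoor wayfinding, an on-screen navigation element needs little more than the direction from the visitor to a POI. The sketch below computes the initial compass bearing between two positions using the standard great-circle bearing formula and turns it into an eight-point compass label. The function names are our own, and how a given AR platform actually draws navigation cues is not covered here.

```python
import math

COMPASS_POINTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    # atan2 returns a value in (-180, 180]; shift it into [0, 360).
    return (math.degrees(math.atan2(y, x)) + 360) % 360


def compass_label(bearing_deg):
    """Map a bearing in degrees to the nearest of eight compass points."""
    return COMPASS_POINTS[round(bearing_deg / 45) % 8]
```

A visitor standing due west of a POI would get a bearing near 90 degrees and the label `E`. With location accuracy of a few meters, such a coarse direction cue is usually more honest than a precise arrow.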

Discovery and inquiry

A design philosophy key to many Exploratorium exhibits is to allow visitors to have direct contact with natural phenomena, or in the case of its exhibits in virtual worlds such as Second Life, with a simulation of the phenomena. This philosophy is directly applicable to designing experiences using mobile AR. Putting the visitors into the exhibit can be a powerful use of mobile AR as the visitors manipulate their individual points of view and interaction with exhibit features. Helen Papagiannis talks about a design focus of her AR exhibits to “heighten presence,” referring to the idea of “the illusion of non-mediation.”

One method of empowering visitors to be connected to an AR experience is to give them a challenge that they have to adapt to in some way in using the exhibit. In the case of the two surrealist recomposition AR exhibits at After Dark, the focused challenge was to align the superimposed virtual elements that floated in front of the visitor into a composition that resembled the original surrealist works and that was satisfying to them.

Fig 11: Recomposing a surreal painting using augmented reality at the Exploratorium’s After Dark: Get Surreal event

Incorporating interaction and play elements for two or more people to do together is another experience design method applicable for mobile AR. Group interaction can stimulate an inquiry approach to learning and can allow visitors to discover their own questions about the exhibit subject matter.

Papagiannis states that “AR fosters a great sense of wonder as a looking glass into another world and can be used to further ignite curiosity and inquiry in a museum setting.”

Some implementation issues

Using hosted content-management tools to create POIs allows exhibit developers to quickly prototype content and interactivity. The Exploratorium has made use of the Hoppala Augmentation CMS (http://augmentation.hoppala.eu) for prototyping layers with the Layar platform. Using third-party CMS tools does add another server dependency though, and potentially also identity-branding for such tools, which you may not want to introduce in the published AR experience.

Positioning of POIs is another issue. For outdoor applications, finding geocoordinates for POIs is easy. Use mapping tools such as Google Maps (http://maps.google.com) and Google Earth (http://www.google.com/earth/index.html), or sites that have integrated mapping functions, such as Get Lat Lon (http://www.getlatlon.com), to easily identify latitude and longitude values. Geocoordinates in AR systems are typically represented in decimal degrees with four or more decimal places. Most GPS devices can display coordinates in this representation, and it’s also easy to convert from degrees, minutes, and seconds to decimal degrees using online conversion utilities. Location-based POIs positioned inside of buildings present a challenge for obtaining accurate location coordinates, however, due to the difficulty in GPS receivers obtaining stable signals from GPS satellites in those locations. Some recommended techniques for getting accurate coordinates are as follows:

- Activate a GPS receiver on a smartphone or standalone GPS device before entering a building you want to get interior location coordinates for, and allow it to lock on to satellites. Enter the building and observe the latitude and longitude coordinate values for POI locations. Once obtained, you will likely need to adjust the location coordinate values to more accurately represent a specific location.

- Use satellite views in mapping applications to determine locations for POIs relative to recognizable landscape features.

- Use floor plan overlays with mapping applications to find usable geocoordinates. Fit the floor plan graphic to the boundaries of the building as represented on the map. You’ll need to scale the floor plan graphic to the same size as the on-screen map representation.
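Two of the coordinate tasks described above are simple enough to sketch in a few lines: converting degrees, minutes, and seconds to decimal degrees, and estimating the coordinates of a spot on a floor plan that has been fitted to a building outline. The function names are hypothetical, and the floor-plan function assumes the building's edges run north-south and east-west and that the building is small enough for straight linear interpolation; a rotated building would need an additional rotation step.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees, minutes, seconds plus a hemisphere letter to signed decimal degrees."""
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError("hemisphere must be one of N, S, E, W")
    value = abs(degrees) + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal degrees.
    return -value if hemisphere in ("S", "W") else value


def floorplan_to_latlon(x_frac, y_frac, south, west, north, east):
    """Interpolate a position on a floor plan to latitude and longitude.

    x_frac runs from 0 at the west edge to 1 at the east edge;
    y_frac runs from 0 at the south edge to 1 at the north edge.
    """
    lat = south + y_frac * (north - south)
    lon = west + x_frac * (east - west)
    return lat, lon
```

For example, `dms_to_decimal(122, 26, 24, "W")` gives a value of about -122.44, and `round(value, 6)` keeps more than the four decimal places AR systems typically expect.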

Finding the museum content within a mobile AR browser system can be a challenge due to the in-browser ecosystem of content. The current platforms make efforts to help the user find relevant content using location information as well as through a keyword search. Location contexts display listings of content that has been defined as being geographically near the user. This is useful but can still be challenging to users in their understanding of the interfaces for getting to nearby content, especially since those interfaces also include many other features that may be new to them. Also, browsers that offer access to different methods for accessing AR content (marker-based and location-based) may present confusing interfaces for these choices. One solution to this dilemma is to roll your own applications with AR features (so users don’t have to start their experience inside of an AR browser and experience museum content in a commercial environment). The mobile AR platforms are beginning to offer these capabilities.

Fig 12: Junaio AR Mobile Browser Main Screen with Exploratorium After Dark Channel Featured


3. Assessing Visitor Interaction with Mobile AR

Methods to assess visitor interaction with AR apps and content are still in the early stages of formation and will develop within the teaching and learning and informal education communities over the next few years as more museums and educators work with this emerging medium. Some methods that are relatively easy to implement now include direct observation of visitors’ use of, and challenges with, the content and technology; interviews with visitors; gathering comments from visitors; collecting photos and screenshot images made by visitors; and gathering Web traffic statistics for content elements. Ideally, AR platforms should gather statistics on channel/layer use and provide access to reporting so that developers and program staff can have quantitative data about exhibit usage. It is possible to build information gathering into the Web service that the AR application queries to fetch POI data and to integrate tracking with Google Analytics and other Web analytics systems.
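As a rough sketch of building information gathering into the POI Web service, the Python below records one log entry each time POI data is requested and then tallies requests per layer. It is an illustration under stated assumptions, not the Layar or Junaio request format: the function names, the parameter names ("layer", "lat", "lon"), and the response shape are all hypothetical, and a real service would write to a database or analytics system rather than an in-memory list.

```python
import json
from collections import Counter
from datetime import datetime, timezone


def handle_poi_request(params, pois, usage_log):
    """Log a POI request, then return the POI data as a JSON string.

    params is a dict of query parameters sent by the AR application;
    the keys used here are hypothetical placeholders.
    """
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "layer": params.get("layer", "unknown"),
        "lat": params.get("lat"),
        "lon": params.get("lon"),
    }
    usage_log.append(entry)
    return json.dumps({"layer": entry["layer"], "pois": pois})


def requests_per_layer(usage_log):
    """Count how many POI requests each layer or channel received."""
    return Counter(entry["layer"] for entry in usage_log)


# Usage: two requests to one hypothetical layer, one to another.
log = []
sample_pois = [{"title": "Sample exhibit"}]
handle_poi_request({"layer": "afterdark"}, sample_pois, log)
handle_poi_request({"layer": "afterdark"}, sample_pois, log)
handle_poi_request({"layer": "outdoor"}, sample_pois, log)
counts = requests_per_layer(log)
```

Because the log captures the requesting location as well as the layer, the same entries could later support simple reports on where visitors were when they opened the content. Such data should be handled with visitor privacy in mind.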

At the After Dark event, most visitors with capable smartphones who attempted to use the AR exhibits required little assistance to load the AR browser and to navigate to the Exploratorium channel to scan the marker images. Explainers helped some visitors get started, and many visitors helped each other with these steps, often during the time the AR browser was loading POI data on their phone.

Most visitors found scanning markers to be fairly easy. The key to scanning markers in Junaio is to hold the phone with the camera pointing at the marker so that the app detects the white border surrounding the LLA marker image. The app provides on-screen feedback when a marker is detected.

Fig 13: Visitors interacting with AR exhibit at the Exploratorium’s After Dark: Get Surreal event


One interesting issue arose, however. Although directions for downloading the AR browser and accessing the content appeared in the event program and on signs in the museum at each exhibit location, several visitors recognized the LLA marker image as something they could scan to get to a Web site and launched a familiar QR code reader application they already had installed on their phone to try to scan the images. With more information and assistance, those visitors were able to quickly get to the AR browser and proceed with scanning the marker images. I’d debated using QR code markers to connect people directly to the Junaio app in the Apple App Store and in the Android Market, but decided against it, thinking it would be too confusing to have both QR and LLA markers for visitors to interact with, each for a different purpose. But the behavior is interesting in that it points to a growing literacy among smartphone users in recognizing 2-D markers and their connection to Web content. A consequent design challenge in using LLA and other types of markers is to provide adequate information to the visitor about the marker type and the specific software needed to scan it.

4. Mobile AR Platforms: The Future

The following is a short list of features that could enhance mobile AR platforms and offer more options for museum content development.

- Enhanced support for streaming media. It’s currently possible to incorporate Web video into POIs, both as discrete video clips and as animated textures on 3-D models. This is a great capability. Support for video applications like annotating video, multiple audio tracks, captioning, video capture, access to live streams, and sharing would be powerful tools for building AR experiences.

- Greater adoption of open standards for creating and publishing AR content. Since mobile AR is relatively new, it’s largely being driven by proprietary platforms. As the technology matures, and open standards such as HTML5, WebGL, and X3D become more widely adopted, it’s likely that capable AR platforms that use open standards will be developed into robust systems. One effort in this area that seems promising is the Instant Reality AR/VR framework from the Fraunhofer Institute (http://www.instantreality.org/).

- Fluid connection between 2-D Web and AR content.

- Full localization to a variety of languages.

- Accessible user-interface design to support sight-impaired users: spatial audio and accessible interfaces for content.

- Better compasses and greater GPS accuracy in devices for more precise positioning of POIs.

- Better displays to help deal with use in outdoor settings and on-screen representation of text/graphical content.

- Support for AR on tablets, such as the iPad and Android and Windows Mobile devices.

- Gestural interface support via haptic devices and image detection.

- And, looking further into the future: wearable computing that supports AR: lightweight eyephones with stereo displays and spatialized audio, AR overlays on eyeglass and contact lenses.

Imagine a visitor entering a museum or passing by a museum exhibit at a location outside of the museum and getting a notification through her mobile device that augmented information and exhibits are available. She’s offered a direct link to download the necessary application. Seeing that the message is from a trusted source, she accepts the application link. The link is activated and the application is installed, automatically opens, and proceeds to download the museum’s augmented experience content. Once the data are loaded, the device notifies the visitor that it’s ready. She explores the space, with ready access to location and time-specific information about exhibits and events going on in the museum that day. She discovers on the floor of the museum augmented layers to some exhibits that allow her to sketch over live video views of the exhibits and of her friends playing with them. The AR application saves her annotated videos, automatically georeferences and contextually links them to the museum’s related exhibits and activities, and attaches them to her account on its community Web site. As she leaves the museum later that day, she receives an instant message with an image she made using an augmented exhibit. The image is overlaid with a note in her handwriting – a question about the colors of stars that she thought of while playing with the prism that let her bend beams of light.

5. References

Exploratorium. After Dark. Consulted January 27, 2011. http://www.exploratorium.edu/afterdark/

Exploratorium. Ellyn Hament, ed. (Oct./Nov./Dec. 2009). Explore.

Exploratorium. Exploratorium 40th Anniversary 1969–2009: Surprise! Consulted December 12, 2010. http://www.exploratorium.edu/40th/surprise/run/index.html

Exploratorium. Museum Virtual Worlds. Consulted January 12, 2011. http://museumvirtualworlds.org/?p=258

Exploratorium. Outdoor Exploratorium. Consulted December 17, 2010. http://www.exploratorium.edu/outdoor/

Exploratorium. Science in the City. Consulted January 4, 2011. http://www.exploratorium.edu/tv/?project=104

Gibson, William (2007). Spook Country. New York: G. P. Putnam’s Sons.

Johnson, L., H. Witchey, R. Smith, A. Levine, and K. Haywood (2010). The 2010 Horizon Report: Museum Edition. Austin, TX: The New Media Consortium: 16–19. http://www.nmc.org/pdf/2010-Horizon-Report-Museum.pdf

Ontario Science Centre (2010). The Amazing Cinemagician. Consulted December 30, 2010. http://www.ontariosciencecentre.ca/calendar/default.asp?eventid=975&ddmmyyyy=21052010

Papagiannis, Helen (2010). Augmented Stories. Consulted December 30, 2010. http://augmentedstories.wordpress.com/2010/06/04/documentation-from-ontario-science-centre-exhibition/

SparkProject. FLARToolKit. Consulted December 5, 2010. http://www.libspark.org/wiki/saqoosha/FLARToolKit/en

Cite as:

Rothfarb, R., Mixing Realities to Connect People, Places, and Exhibits Using Mobile Augmented-Reality Applications. In J. Trant and D. Bearman (eds). Museums and the Web 2011: Proceedings. Toronto: Archives & Museum Informatics. Published March 31, 2011. Consulted

http://conference.archimuse.com/mw2011/papers/mixing_realities_connect_people_places_exhibits_using_mobile_augmented_reality